New tool from Cyabra uses AI to crack down on bots, AI-generated spam

Cyabra's botbusters tool uses machine learning to detect text, images or profiles created via AI

A social threat intelligence company is rolling out a new tool to help detect the presence of bot or spam accounts created with the use of artificial intelligence (AI).

Cyabra, an Israel-based start-up, gained prominence in 2022 when billionaire Elon Musk enlisted its help in assessing the prevalence of bots and spam accounts on Twitter as his ownership group pursued and completed its acquisition of the social media platform, which is now known as X. Cyabra’s new tool, botbusters.ai, can detect AI-generated text, images and fake profiles, and video detection capabilities are in the works.

"We want to be the watchdog for what’s happening out there," Cyabra CEO Dan Brahmy told FOX Business. "Botbusters.ai is our way of allowing people and corporations around the globe to use a free solution that allows them to measure if text or pictures and even profiles have been human-led or have been computer-led."

Israeli start-up Cyabra has developed a tool that uses AI to detect bots and spam accounts, as well as AI-generated text and images used to further scams or sow disinformation. (Getty Images)

Brahmy said it’s "really very easy" to use AI tools "that allow you to create deepfakes and gags at the speed of light, you know you just push a bunch of buttons," and that such tools can serve as weapons for spreading disinformation or perpetuating scams.

He explained that Cyabra’s tools also use a form of AI known as "semi-supervised machine learning," which trains models on a mix of labeled and unlabeled data, and that botbusters.ai weighs about 800 machine-learning parameters to determine whether text, images or profiles were created using AI or otherwise engage in bot-like activity.

Brahmy also said that the three most prevalent types of bot activity Cyabra has encountered are corporate impersonations, state-sponsored bots pursuing geopolitical ends, and seemingly random chaos creation that’s intended to distract from things like product launches.

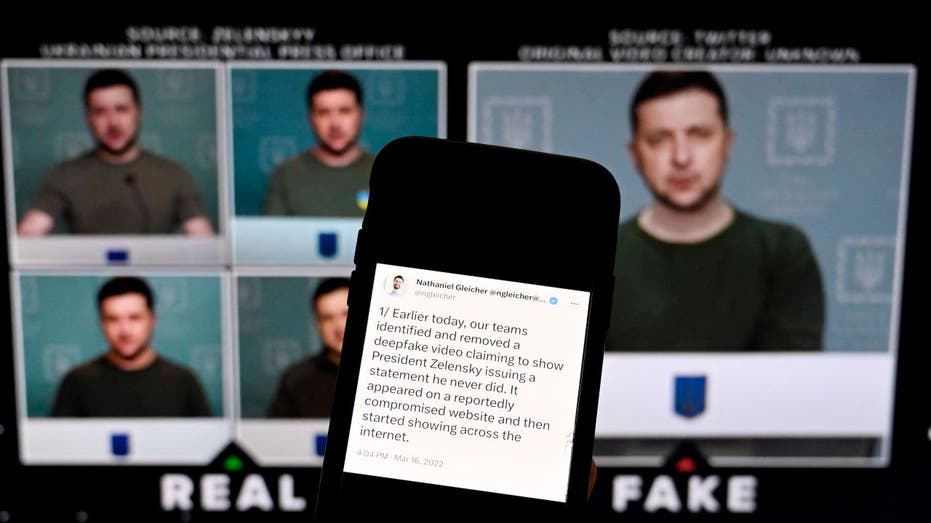

AI has been used by state actors to generate deepfakes to sow confusion about world leaders, including Ukrainian President Volodymyr Zelenskyy, and geopolitical issues. (Olivier Douliery / AFP / File / Getty Images)

Corporations and the executives who run them have also been the target of impersonation scams that leveraged AI tools.

"We are seeing a massive amount of impersonations of executives all the way from a well-known chairwoman to CEOs or to other CXOs on very prominent companies within the maybe Fortune 2000 list," Brahmy said. "I can tell you that it’s something that is so common that I wouldn’t be surprised if it already hit a pretty large portion of these companies."

"If you impersonate the person properly, with the right social media profiles and the right social media identity, then you spread the right content at the right time and the audience gobbles it up properly, that’s it, especially if the rumor is interesting enough, is provocative enough," he added.

Brahmy said that state actors, meaning foreign governments, commonly use bots to spread disinformation or propaganda.

"A lot of geopolitical storms that we see both in the Asia-Pacific, a little bit in Europe, and then of course, a lot in the United States," Brahmy said. "[There’s] a lot because the stakes are extremely high whether they’re related to the political side of the aisle, all those soon-to-be elections or recent elections – that’s a good enough reason, and it’s a cycle on a geopolitical level."

Cyabra's botbusters.ai tool uses machine learning to detect AI-generated text, images and profiles. (iStock / iStock)

While impersonation scams targeting corporate executives have gained a significant amount of attention, some fraudsters have deployed AI tools to target lower-level workers in an effort to gain access to privileged information or data. Others have impersonated companies’ customer service representatives to go after customers who are trying to deal with an issue.

Scammers have also impersonated corporate social media accounts. In one instance from late 2022, a fake Eli Lilly account on Twitter posted that the company was making insulin free, which caused the company’s stock price to plunge during that day’s trading before it became clear the post was fraudulent.

Brahmy noted that bots have been used for seemingly random activities that carry an underlying financial incentive, such as distracting social media users from a high-profile product launch.

"It’s just chaos creation at its best. And we’ve seen that technique being employed also in the public sector space, but it’s pretty common in the corporate space as well, because if you take the eyeballs off those conversations, you are taking consumers away and that’s what hurts the most, especially for the consumer-driven companies," Brahmy said.