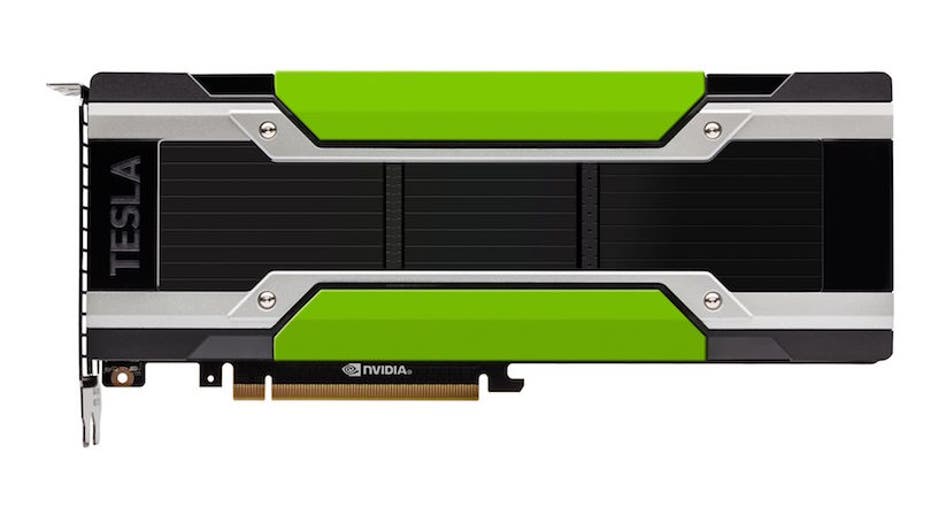

Nvidia's Tesla P100 GPU Gets a PCIe Version

Nvidia's Tesla P100 GPU, capable of replacing dozens of traditional CPU nodes to speed up the processing of complex algorithms, will now be offered in a PCIe form factor, the company announced today.

Offering a PCIe card makes it easier to install the P100 in the standard servers that stand to benefit most from its ability to accelerate computing tasks, especially the machine-learning algorithms that power artificial intelligence applications. When Nvidia originally announced the P100 in April, CEO Jen-Hsun Huang described his company as "all-in" on artificial intelligence and virtual reality.

The P100 is based on Nvidia's Pascal GPU architecture, and the new version connects over PCIe Gen 3, which offers 32GB per second of bidirectional bandwidth. According to Nvidia, a single server node with four PCIe-connected P100s can replace up to 32 CPU-based nodes.

The PCIe version does take a slight performance hit: it is rated at 18.7 teraflops of half-precision performance, compared with 21 teraflops for the original P100, a drop of roughly 11 percent. That difference won't matter much in massive server banks, but it could be noticeable in smaller installations, such as those used for autonomous car testing.

Customers outside large server farms for whom that performance drop is a problem will likely consider Nvidia's in-house supercomputer, the P100-powered DGX-1. Built around eight Tesla P100 GPUs, it delivers 170 teraflops of peak half-precision performance, which Nvidia says is the equivalent of 250 CPU-based servers.

The first PCIe-based P100s will be available in servers from Dell, HP, IBM, and other companies beginning in fall 2016. One of their first deployments will be in Europe's fastest supercomputer, the Piz Daint system at the Swiss National Supercomputing Center in Lugano.

This article originally appeared on PCMag.com.