Self-Driving Cars Are Smart, Just Not Smart Enough

It’s probably been 20 years since I’ve engaged cruise control on a vehicle. Aside from giving your foot a rest during a long road trip, I never really saw the purpose. Besides, for me, driving is fun. And it’s a little scary to give up any amount of control of two tons of metal barreling down a highway at 65 or 70 mph.

On the other hand, low-cost sensor technology has been around for years. It's hard to believe that not every car has an active warning system that alerts us when we get too close to another car, begin to nod off behind the wheel, or have had a little too much to drink. And machines are now smart enough to power self-driving systems like Tesla's Autopilot.

The question is, are self-driving systems smart enough to risk life and limb? Fully autonomous vehicles are one thing, but when you have both a computer and a human behind the wheel at the same time, there are far too many situations where it’s not clear who or what should be in charge, and when. It’s definitely not a no-brainer.

We've all heard about the Tesla Model S crash in Florida last May, which killed the driver. Some reports claim the system couldn't distinguish the white side of a tractor-trailer from a brightly lit sky. The tragedy is, of course, being investigated, but nobody ever said these systems would be flawless. There will be accidents. There will be fatalities. And proponents say many more lives will be saved.

That said, in many reported accidents where the driver lived to talk about it, there's been some level of confusion: not just about how the system performed, but about whether and when the driver is supposed to take over from it.

The Wall Street Journal recently reported on a non-injury crash between a Model S on Autopilot and a car that was parked on a California interstate, of all places. The driver said Autopilot failed to react, but after reviewing the car's data, Tesla said the driver was at fault for braking, which disengaged Autopilot.

“So if you don’t brake, it’s your fault because you weren’t paying attention,” said the driver. “And if you do brake, it’s your fault because you were driving.” She also told the Journal that she does not plan to use the automated driving system again. I think that’s a very smart move.

The problem with auto accidents is that they often turn into "he said, she said" arguments over who's at fault. Now you can add "car company said" to the confusion.

Maybe drivers need more education. Maybe self-driving systems need a few more software updates. Maybe there's a learning curve … for us and the machines. Regardless, it seems clear to me that we've traveled too far, too fast into the all-too-uncertain domain of semi-autonomous vehicles.

Don't get me wrong. In the not-too-distant future, sooner than you might expect, there will be self-driving cars. Lots and lots of self-driving cars. The technology is cost-effective, the regulations are being hammered out, and the business case is overwhelming. That makes them inevitable.

Google has been testing its own cars for years and currently has a deal with Fiat Chrysler to build minivans based on the search giant's technology. Apple, Uber, Tesla, practically every major automaker and a host of startups are all racing, on their own or in tandem, to disrupt a global market for vehicles and services valued in excess of $5 trillion.

And while I'm not planning to give up my top-down sports car driving privileges anytime soon, I'm happy to be the first one to hop into a computer-driven Uber after a fun night of drinking and dining. At least, I will be once the technology has improved to the point where it can distinguish a parked car from thin air or a white truck from a bright sky.

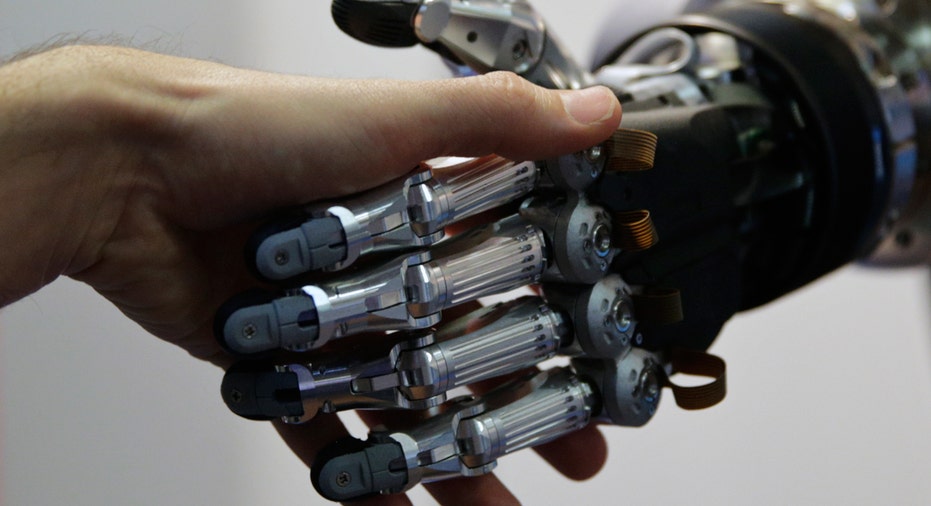

Until then, I’m going to pass on self-driving systems. While I’m a big fan of sensors and warning buzzers, I feel the same way about semi-autonomous systems as I do about cruise control. For me, there just seems to be too much uncertainty and unnecessary risk in the gray area between man and machine.