Facebook parent Meta touts Artificial Intelligence robot that can learn from humans

Meta said its robot was able to rearrange objects inside a lab and an apartment

Meta's AI Robot rearranges a 'variety of objects'

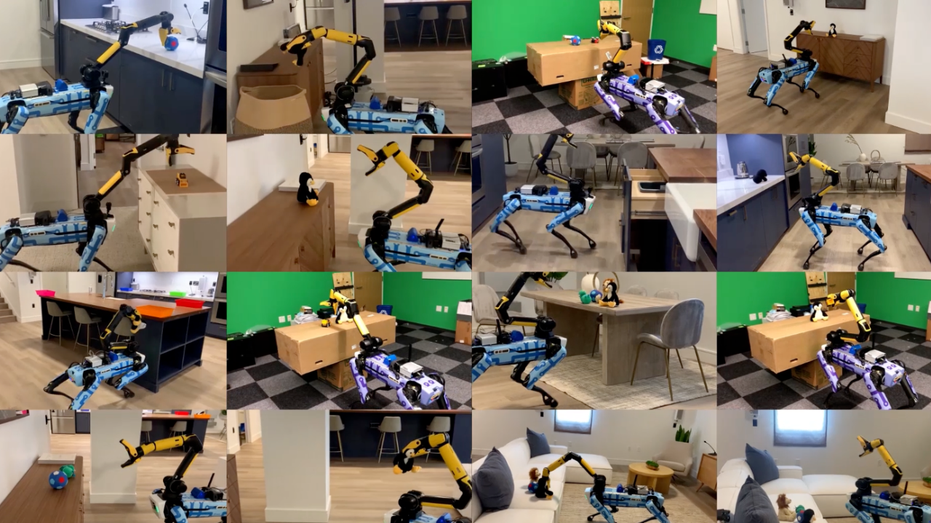

Meta's FAIR team says that it has used adaptive (sensorimotor) skill coordination (ASC) on a Boston Dynamics Spot robot to "rearrange a variety of objects" in a "185-square-meter apartment and a 65-square-meter university lab." (Credit: Meta)

Meta announced two advancements towards developing AI robots that can perform "challenging sensorimotor skills."

In a press release on Friday, the company announced that it has developed a way for robots to learn from real-world human interactions "by training a general-purpose visual representation model (an artificial visual cortex) from a large number of egocentric videos."

The videos come from an open-source Meta dataset, which the company says shows people doing everyday tasks such as "going to the grocery store and cooking lunch."

One way that Meta's Facebook AI Research (FAIR) team is working to train the robots is by developing an artificial visual cortex. In humans, the visual cortex is the region of the brain that enables individuals to convert vision into movement.

The dataset used to teach the robots, Ego4D, contains "thousands of hours of wearable camera video" of research participants performing daily activities such as cooking, sports, cleaning, and crafts.

According to the press release, the FAIR team created "CortexBench," which consists of "17 different sensorimotor tasks in simulation, spanning locomotion, navigation, and dexterous and mobile manipulation."

"The visual environments span from flat infinite planes to tabletop settings to photorealistic 3D scans of real-world indoor spaces," the company says.

In announcing the second development, Meta's FAIR team says that it has used adaptive (sensorimotor) skill coordination (ASC) on a Boston Dynamics Spot robot to "rearrange a variety of objects" in a "185-square-meter apartment and a 65-square-meter university lab."

When used on the Spot robot, Meta says that ASC achieved "near perfect performance," succeeding in 59 of 60 episodes and overcoming "hardware instabilities, picking failures, and adversarial disturbances like moving obstacles or blocked paths."

Video shared by Meta shows the robot moving various objects from one location to another.

The FAIR team says it was able to achieve this by teaching the Spot robot to "move around an unseen house, pick up out-of-place objects, and put them in the right location."

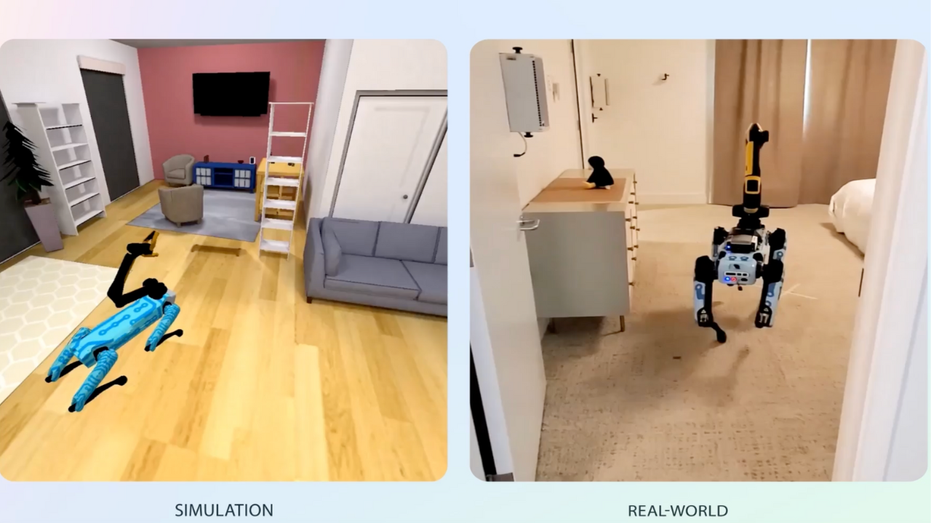

Simulation and real world view of 'Spot' Robot rearranging objects

When tested, the Spot robot used "its learned notion of what houses look like" to complete the task of rearranging objects. (Credit: Meta)
