Google's AI Chip Could Be a Threat to NVIDIA

Image source: NVIDIA.

Graphics chip company NVIDIA has had great success selling its products to enterprise customers. In NVIDIA's latest quarter, the company's data center segment generated $143 million of revenue, up 63% year over year. For certain highly parallel workloads, GPUs are far more efficient than CPUs, and all of the major cloud computing companies have adopted NVIDIA's products.

Deep learning, where software is trained on data to perform a specific task such as image recognition or natural language analysis, is one workload where GPUs excel. Alphabet's Google, Microsoft, Amazon, and IBM are all actively using deep learning, and NVIDIA's Tesla GPU accelerators are being used to speed up the process.

While NVIDIA has been successful so far, a recent announcement from Google could create a major problem for NVIDIA's data center business going forward.

Google is designing its own chips

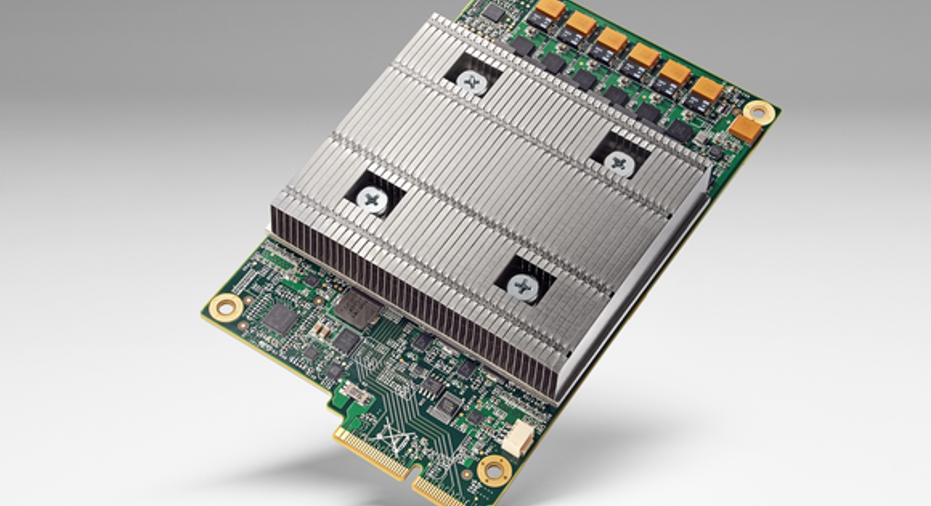

Tensor Processing Unit board. Image source: Google.

At Google's recent I/O conference, the company announced that it had developed a custom processor designed specifically for deep learning. The Tensor Processing Unit, or TPU, is an application-specific integrated circuit (ASIC) built to accelerate TensorFlow, Google's machine learning software. Google has been running TPUs in its data centers for more than a year, and the company says they deliver an order-of-magnitude improvement in performance per watt for machine learning. Google boasted that this is roughly equivalent to fast-forwarding chip technology seven years into the future, or about three generations of Moore's Law.
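Where might a figure like "seven years" come from? Here is a rough back-of-the-envelope sketch. The inputs are our own assumptions, not numbers Google published: the gain is exactly tenfold, and Moore's law doubles chip efficiency about once every two years.

```python
import math

# Assumptions (ours, not Google's): a flat 10x gain in performance per watt,
# and one Moore's-law doubling roughly every 2 years.
improvement = 10.0
years_per_doubling = 2.0

# A 10x gain equals log2(10) successive doublings.
doublings = math.log2(improvement)
years_ahead = doublings * years_per_doubling

print(f"{doublings:.2f} doublings ~ {years_ahead:.1f} years")
```

Under these assumptions, a tenfold gain works out to a little over three doublings, or roughly six and a half years. That is in the same ballpark as Google's "seven years" claim. A slightly slower doubling pace would stretch the estimate closer to seven.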

Few concrete details were disclosed about Google's TPUs. Norman Jouppi, a distinguished hardware engineer at Google, told The Wall Street Journal that Google currently uses more than 1,000 of the chips in its data centers. According to Google, more than 100 of its products currently use deep learning, and TPUs already power parts of the company's search algorithm and the Street View feature of Google Maps. AlphaGo, Google's computer program that beat a professional Go player earlier this year, was also powered by TPUs.

While GPUs are more efficient than CPUs for deep learning, a processor designed specifically for deep learning can be more efficient still. GPUs were built for the calculations needed to render and display 3D graphics. Deep learning happens to map well onto NVIDIA's graphics architecture, but a chip designed at the hardware level for deep learning alone should be substantially better. If Google has truly achieved a tenfold improvement in performance per watt over the alternatives, NVIDIA has a serious problem.

Cryptocurrency mining offers a good illustration. In bitcoin's early days, ordinary PCs could mine the virtual currency profitably. By design, however, the network raises mining difficulty as more computing power joins, so miners moved to GPUs, which were more efficient than CPUs. GPUs in turn proved inefficient compared with ASICs designed specifically for bitcoin mining, and GPU mining eventually became unprofitable as well.

Deep learning may be following a similar path. Google is large enough, and uses deep learning broadly enough, that designing its own chip made sense. TPUs aren't going to replace Intel's server chips, since they are accelerators rather than general-purpose processors, but it may be a different story for NVIDIA.

Google plans to make TPUs available through its cloud computing platform, which could give the company an advantage over Amazon's AWS and Microsoft's Azure. Microsoft has also been experimenting with alternatives to GPUs, using field-programmable gate arrays (FPGAs) to accelerate its Bing search engine.

One thing we don't know is whether Google uses its TPUs both to train its deep learning models and to run them once they are trained (a step often called inference). Training is far more computationally intensive, and it's unclear whether the TPUs were designed to handle it. Jouppi, in an interview with EE Times, would not comment on whether TPUs handle training. If they don't, NVIDIA's GPUs are likely still being used for that purpose.

There are still a lot of unknowns surrounding Google's TPUs, and it's too early to say how much of an effect they will have on NVIDIA's data center business. In any case, the trend toward specialized processors for deep learning is not a positive development for the graphics chip company.

The article Google's AI Chip Could Be a Threat to NVIDIA originally appeared on Fool.com.

Suzanne Frey, an executive at Alphabet, is a member of The Motley Fool's board of directors. Timothy Green owns shares of International Business Machines. The Motley Fool owns shares of and recommends Alphabet (A and C shares), Amazon.com, and NVIDIA. The Motley Fool owns shares of Microsoft. We Fools may not all hold the same opinions, but we all believe that considering a diverse range of insights makes us better investors. The Motley Fool has a disclosure policy.

Copyright 1995 - 2016 The Motley Fool, LLC. All rights reserved.