Why Facebook Is Doubling Down on Its AI

Imagine the computing power necessary to handle the activity of 1.23 billion users playing 100 million hours of video and uploading 95 million photos and videos. Facebook, Inc. (NASDAQ: FB) processes that and more every day. Now imagine sifting through all that data to perform facial recognition, describe the contents of photos and videos, and populate your news feed with relevant content. Facebook handles all of those tasks on servers infused with its homegrown brand of artificial intelligence (AI).

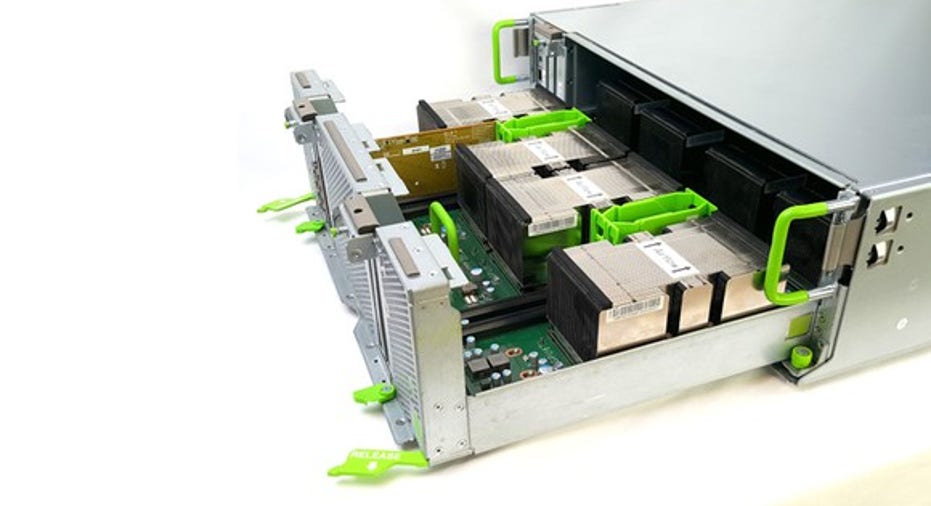

Behind the scenes, training the machine-learning models that power those tasks has been the job of Facebook's AI brain, a server named Big Sur, since 2015. Now the system that has been at the heart of the company's AI activity for the last two years is being replaced by a newer, faster model. In a blog post, Facebook revealed that the successor is dubbed Big Basin, and the new platform boasts some impressive credentials: the upgraded AI server can train machine-learning models that are 30% larger in about half the time.

Facebook data center in Prineville, Oregon. Image source: Facebook.

If you've used Facebook, you've used AI

Machine learning combines software models and algorithms that sort through data at lightning speed and draw conclusions from what they find. This might sound futuristic, but the technology is already in everyday use. When you tag a friend in a newly uploaded photo, for example, Facebook can run facial recognition, compare the new photo with the photos in its database, and suggest a name. "If you've logged into Facebook, it's very likely you've used some type of AI system we've been developing," according to Kevin Lee, a technical program manager at Facebook and author of the blog post.
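To make the photo-tagging example concrete, here is a minimal Python sketch of one common approach to face matching. It assumes each face has already been turned into a numeric "embedding" vector by some recognition model; the function names, the toy three-number vectors, and the 0.8 similarity threshold are hypothetical illustrations, not Facebook's actual system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def suggest_tag(new_face, known_faces, threshold=0.8):
    """Return the name whose stored embedding best matches new_face,
    or None if no match reaches the (hypothetical) threshold."""
    best_name, best_score = None, threshold
    for name, embedding in known_faces.items():
        score = cosine_similarity(new_face, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Toy 3-dimensional "embeddings"; real systems use learned vectors
# with many more dimensions.
known = {
    "Alice": [0.9, 0.1, 0.0],
    "Bob": [0.0, 0.8, 0.6],
}
print(suggest_tag([0.85, 0.15, 0.05], known))  # Alice
print(suggest_tag([0.0, 0.0, 1.0], known))     # None
```

The threshold keeps the system from suggesting a name when no stored face is a convincing match.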

Lee explained that the new system was built using graphics processing units (GPUs) from NVIDIA Corporation (NASDAQ: NVDA) to achieve this new level of AI computing. The platform hosts eight Tesla P100 GPU accelerators, which NVIDIA describes as "the most advanced data center GPU ever built." The system also features NVIDIA NVLink, which connects the GPUs in what Facebook describes as a "hybrid mesh cube." Systems like this can be slowed by bottlenecks in the connections between GPUs, which NVLink is designed to prevent. Lee indicated that the architecture is similar to NVIDIA's DGX-1, the company's AI supercomputer in a box. Lee quipped that "Big Basin behaves like a JBOD [just a bunch of disks] -- or as we call it, JBOG, just a bunch of GPUs."
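The "hybrid mesh cube" wiring can be pictured as a small graph. This Python sketch assumes the layout NVIDIA has described for the DGX-1: two fully connected groups of four GPUs, plus one link between each GPU and its counterpart in the other group, so every GPU uses four NVLink connections. Big Basin's exact wiring may differ, and the helper names are hypothetical.

```python
from collections import deque


def hybrid_cube_mesh(num_gpus=8):
    """Build an adjacency map for an assumed DGX-1-style hybrid cube-mesh:
    two fully connected groups of GPUs, plus a link from each GPU to its
    counterpart in the other group."""
    half = num_gpus // 2
    links = {gpu: set() for gpu in range(num_gpus)}
    # Fully connect each group (GPUs 0-3 and GPUs 4-7 for eight GPUs).
    for group_start in (0, half):
        group = range(group_start, group_start + half)
        for a in group:
            for b in group:
                if a != b:
                    links[a].add(b)
    # Cross-link GPU i with GPU i + half.
    for gpu in range(half):
        links[gpu].add(gpu + half)
        links[gpu + half].add(gpu)
    return links


def hops(links, src, dst):
    """Breadth-first search for the fewest GPU-to-GPU links from src to dst."""
    seen = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return seen[node]
        for nxt in links[node]:
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    return None


mesh = hybrid_cube_mesh()
print({gpu: len(peers) for gpu, peers in mesh.items()})  # every GPU has 4 links
print(hops(mesh, 0, 5))  # 2
```

In this model no GPU is more than two links away from any other, so data rarely has to pass through more than one intermediate GPU.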

More computing power was necessary

Facebook's recent emphasis on live video streaming posed challenges because users adopted it so quickly. Larger and more complex data sets required more computing power at higher speeds. The company uses AI not only to classify real-time video but also in other applications, such as speech and text translation. The new platform provides the data capacity and speed needed to train Facebook's next generation of AI models.

Facebook plans to release the design specifications of its AI server via the Open Compute Project in the near future. Once that happens, any ambitious computer engineer with sufficient time and money could conceivably build one of these systems in his or her basement.

Big Basin AI Server can train models 30% larger than its predecessor could, in half the time! Image source: Facebook.

Groundbreaking AI chip

Facebook isn't the only company creating innovative AI applications. Alphabet's (NASDAQ: GOOGL) (NASDAQ: GOOG) Google revealed the details behind its Tensor Processing Unit (TPU), a specialized chip it designed and has used for the last two years to power its AI systems, known as deep neural networks. These machine-learning systems are built with algorithms and software modeled on the human brain, and they learn by analyzing vast quantities of data.
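The idea of a system that "learns by analyzing data" can be illustrated with the simplest artificial neuron, a perceptron. This Python sketch is only a toy stand-in: deep neural networks stack many layers of far more sophisticated units, and nothing here reflects how Google's TPU-powered systems are implemented. The training data (the logical AND function), learning rate, and number of passes are arbitrary choices for illustration.

```python
def train_perceptron(samples, epochs=10, learning_rate=0.1):
    """Learn weights for a single artificial neuron from labeled examples
    using the classic perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction
            # Nudge each weight (and the bias) toward the correct answer.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# Toy training set: the logical AND of two inputs.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(data)
print([predict(w, b, x) for x, _ in data])  # [0, 0, 0, 1]
```

The neuron starts knowing nothing (all weights zero) and adjusts itself only when it gets an example wrong, which is the basic sense in which such systems learn from data.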

In a Google blog post, hardware engineer Norm Jouppi described the TPU's capabilities, saying that it processed AI workloads 15 to 30 times faster than conventional CPUs and GPUs while delivering 30 to 80 times better performance per watt. Google realized six years ago that the deep-learning speech-recognition technology it was deploying to users demanded vast amounts of computation, and worried that it would have to double the number of its data centers just to keep up. Google credits the TPU with improvements in its Translate, Image Search, and Photos programs, and with the victory of its AlphaGo program over one of the world's best Go players.

Final thoughts

It is difficult to quantify the value these innovations bring to the companies that develop them, all the more so because the companies are sharing them with the world. Very few companies involved in AI research disclose any direct financial benefit, but the advances make their products more useful to consumers, and the resulting technologies shape the things we do and the products we use every day.


Suzanne Frey, an executive at Alphabet, is a member of The Motley Fool's board of directors. Danny Vena owns shares of Alphabet (A shares) and Facebook. Danny Vena has the following options: long January 2018 $640 calls on Alphabet (C shares) and short January 2018 $650 calls on Alphabet (C shares). The Motley Fool owns shares of and recommends Alphabet (A shares), Alphabet (C shares), Facebook, and Nvidia. The Motley Fool has a disclosure policy.