Adobe unveils new Firefly generative AI tools for creative platforms

Adobe is unveiling the next-generation Firefly suite of generative AI tools for its applications

Adobe on Tuesday unveiled new versions of generative artificial intelligence (AI) tools for its creative platforms at its Adobe MAX conference in Los Angeles.

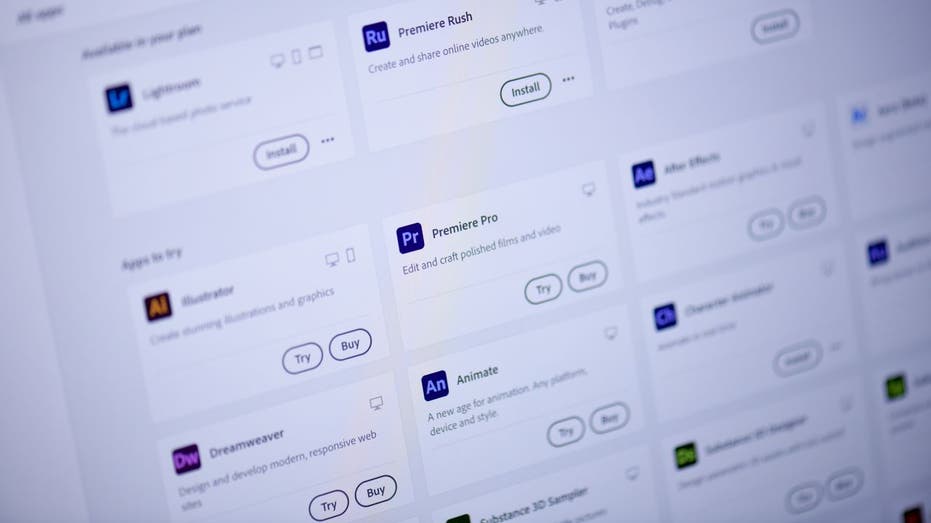

The latest release includes the next-generation Firefly Image 2 model that produces higher-quality imagery than the initial Firefly model, which became generally available last month. Adobe also released a new Firefly Vector Model, which it says is the world’s first generative AI tool focused on producing vector graphics used by designers, and a Firefly Design Model that generates template designs, along with other new features in Adobe Creative Cloud.

Ely Greenfield, CTO of Adobe’s Digital Media division, told FOX Business that Adobe’s latest updates and integrations of Firefly aim to move beyond the "dice roll" of entering a prompt into an AI-powered image tool that may or may not produce the desired output by giving creators more control over the AI tool’s output.

"For the bulk of the creative flows out there, we think that being able to really specify with more control what kind of an image you’re looking for and doing that using a combination of prompts and selections and example images and styles is the way of the future for people really leveraging this technology," he explained. "And in order to do that, they have to do that directly in real tools and workflows."

Adobe unveiled three new Firefly generative AI models to help creators make content that's safe for commercial use. (Photographer: Gabby Jones/Bloomberg via Getty Images)

One of the upgrades to Firefly’s image generator allows users to incorporate a reference image along with a written prompt to guide the stylistic output from the AI image generator. The prompts and reference images can be used to give outputs a wide range of artistic styles, ranging from watercolors to abstract and photorealistic styles.

| Ticker | Security | Last | Change | Change % |
|---|---|---|---|---|
| ADBE | ADOBE INC. | 268.38 | -1.01 | -0.37% |

Creators can also manually adjust photo settings to change the depth of field, add a motion blur and widen or narrow the field of view.

"Being able to get exactly the image you want by describing it using words is a very hard thing to do, no matter how good the models are," Greenfield said. In an example from the presentation, reference images with a more modern look versus a softer, cartoonish motif were used to generate vastly different images of a fluffy sloth toy from the same written prompt.

Adobe is unveiling new generative AI tools integrated into Adobe Creative Cloud applications. (Photographer: Gabby Jones/Bloomberg via Getty Images)

"We’re making more investments in the technology there to make it easier for people to create longer, more complex, more specific prompts that will help them get high-quality output," he explained. "So there is still some value in prompt engineering and we want to make that easier for people to continue to do it even while we’re working to eliminate the need for it."

"To be able to just take an image that I have, you know, that my creatives have created or I’ve created or I’ve licensed from somebody and to be able to provide that to the model and say, ‘Here, make it look like this.’ It’s incredibly empowering," Greenfield said.

"The goal here is for existing creative pros who have the skills to create this content themselves, it’s a massive accelerator for them to be able to just say, ‘I’ve already made an image that has the style that I want, can you please just make more like this?’ And for people like me who don’t have the skills to be able to achieve it on my own… it unlocks workflows that just wouldn’t be available to me otherwise."

Adobe is also previewing Project Stardust at its MAX creative conference this week. (Photographer: Gabby Jones/Bloomberg via Getty Images)

Creators working with branded assets or other intellectual property will be able to use Firefly in the Adobe suite through extensions within the platform that protect those assets from being used to train foundation models or inadvertently becoming available to unapproved users.

"Firefly is designed to be commercially safe. The foundation model out-of-the-box will not accidentally create licensed content or IP that belongs to a company, so you can feel confident that you can create content that you can use," Greenfield said. "But of course, customers want it to create content that is relevant to them, which means they want it to understand their style, their brand, their IP – so we are developing technology that allows customers to create extensions to be able to use Firefly securely with their own content and their own brands and IP for their own use."

"What they do is they literally are used to train up essentially an extension which is owned entirely and controlled entirely by the company itself… They’re quarantined for their use but they’re not available to anybody else," he added.

Adobe is also previewing what it is calling Project Stardust – an AI-powered image editing tool that allows users to delete items or add elements to an image while maintaining the texture, shadows, and other aspects of a scene.

For example, if a user wants to add an object to a specific part of an image, Stardust allows them to highlight that area, connect to the Firefly API within the platform to generate image options for whatever object they want to insert, and then move the object within the image more seamlessly than they would be able to in Photoshop.